The send/recv pattern

Our first introduction to a distributed operation

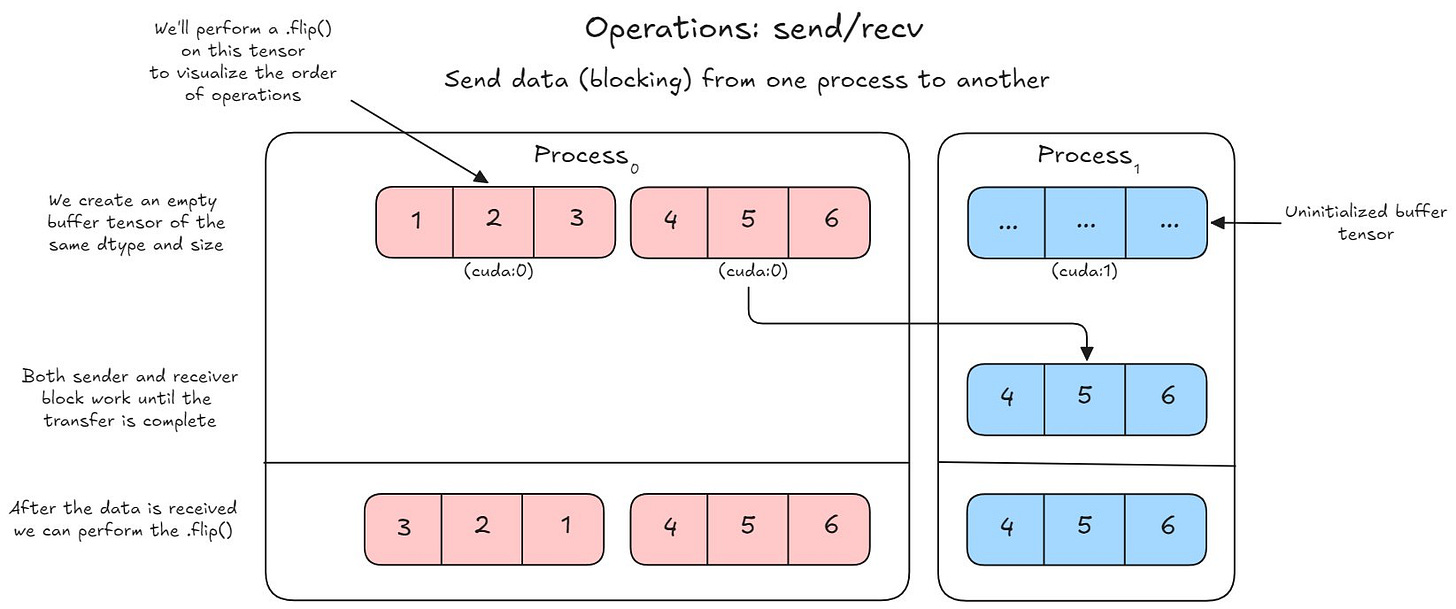

send/recv is a core concept behind distributed PyTorch operations. The general idea is simple: how do you send a tensor from device A to device B?

This paradigm does so in a blocking fashion, meaning neither process involved can do other work until the transfer has completed. The receiving process first creates a tensor that will receive the values. Its existing contents don't matter, since they will just be overwritten, but it must have the same shape and dtype as the sent tensor.

Then the sending process calls .send() with the receiving process as the destination, while the receiving process calls .recv() into the tensor it prepared. Neither side moves on until this handshake is complete.

Finally, once the transfer is done, work can continue, such as the tensor.flip() operation you see below.
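To make the steps concrete, here is a minimal sketch of the pattern with two CPU processes. It assumes the gloo backend, a Linux-style fork start method, and a local rendezvous on 127.0.0.1; the helper names (free_port, worker, run_demo) are made up for this illustration and are not part of PyTorch.

```python
import multiprocessing
import os
import socket

import torch
import torch.distributed as dist


def free_port():
    # Hypothetical helper: ask the OS for an unused local port.
    with socket.socket() as s:
        s.bind(("127.0.0.1", 0))
        return s.getsockname()[1]


def worker(rank, world_size, port, queue):
    # Both processes rendezvous at the same address and port (assumed local).
    os.environ["MASTER_ADDR"] = "127.0.0.1"
    os.environ["MASTER_PORT"] = str(port)
    dist.init_process_group("gloo", rank=rank, world_size=world_size)

    if rank == 0:
        # Sender: blocks here until the transfer to rank 1 has completed.
        tensor = torch.arange(4, dtype=torch.float32)
        dist.send(tensor, dst=1)
    else:
        # Receiver: the buffer's contents are overwritten, but its shape
        # and dtype must match the tensor being sent.
        tensor = torch.empty(4, dtype=torch.float32)
        dist.recv(tensor, src=0)
        # Work continues only after the transfer has finished.
        queue.put(tensor.flip(0).tolist())

    dist.destroy_process_group()


def run_demo():
    # Assumption: the "fork" start method is available (Linux).
    ctx = multiprocessing.get_context("fork")
    queue = ctx.Queue()
    port = free_port()
    procs = [ctx.Process(target=worker, args=(r, 2, port, queue)) for r in range(2)]
    for p in procs:
        p.start()
    result = queue.get(timeout=60)
    for p in procs:
        p.join()
    return result


if __name__ == "__main__":
    print(run_demo())  # [3.0, 2.0, 1.0, 0.0]
```

Rank 0 sends the values 0 through 3, and rank 1 only flips them once recv has returned, which is the ordering the blocking handshake guarantees. On GPUs you would typically swap in the nccl backend and place each tensor on its own device, but the shape of the code stays the same.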
