Distributed Operations: The Reduce Op

Getting data from all processes to one

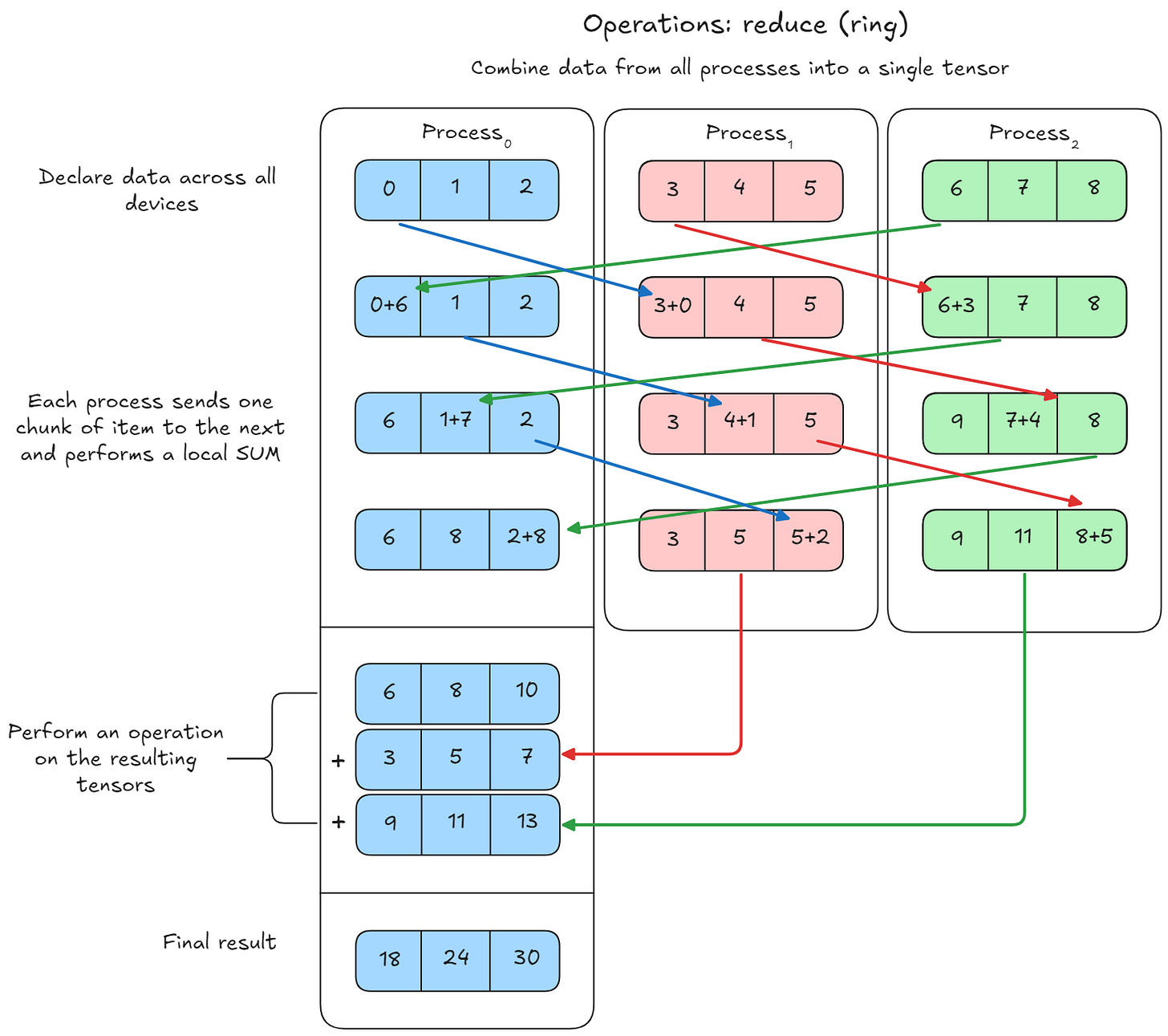

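Ring all-reduce is a bandwidth-friendly way to sum large tensors held on several GPUs or machines. Every worker holds a tensor of the same shape, and every worker should end up with their elementwise sum. It is one of the algorithms NCCL, the backend torch.distributed typically uses on GPUs, implements for all-reduce. Strictly speaking, a plain reduce needs the result on only one process, while the ring algorithm below leaves it on every worker (an all-reduce).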

Imagine the workers (GPUs) are arranged in a circle, so each one has a left-hand and a right-hand neighbor:

1. Each worker splits its local tensor into N slices (chunks) of roughly equal size, where N is the number of workers.

2. In every “round” it sends one slice to its right-hand neighbor and receives a slice from the left.

3. The arriving slice is added to the local copy of that slice.

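4. After N − 1 rounds, each slice has made N − 1 hops and picked up every worker's contribution along the way. Each worker now holds one fully summed slice (a different one per worker). This first phase is often called the “reduce-scatter”.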

5. A second ring phase (often called the “all-gather”) passes the finished slices around for another N − 1 rounds. This time each arriving slice overwrites the local copy instead of being added to it. At the end, every worker has the complete, globally summed tensor.
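To make the steps above concrete, here is a minimal single-process simulation. It assumes each worker's tensor is a plain Python list of numbers and that all workers run each round in lockstep. The function name `ring_all_reduce` and the chunk schedule (in round s, worker r sends slice (r − s) mod N) are illustrative choices. This is not torch's or NCCL's actual code.

```python
from typing import List


def ring_all_reduce(tensors: List[List[float]]) -> List[List[float]]:
    """Hypothetical sketch: simulate ring all-reduce (sum) over N workers.

    tensors[r] is worker r's local tensor; all must have the same length.
    Returns each worker's buffer after the reduce-scatter and all-gather phases.
    """
    n = len(tensors)
    length = len(tensors[0])
    # Split indices [0, length) into n contiguous slices whose sizes differ by at most 1.
    bounds = [(k * length // n, (k + 1) * length // n) for k in range(n)]
    # Each worker operates on its own copy, so the inputs are left untouched.
    bufs = [list(t) for t in tensors]

    def chunk(r: int, c: int) -> List[float]:
        lo, hi = bounds[c]
        return bufs[r][lo:hi]  # slicing returns a copy, like a message on the wire

    # Phase 1 (reduce-scatter): in round s, worker r sends slice (r - s) % n to its
    # right neighbor (r + 1) % n, which adds it into its own copy of that slice.
    for s in range(n - 1):
        # Build all messages first so every send in a round happens "simultaneously".
        msgs = [((r + 1) % n, (r - s) % n, chunk(r, (r - s) % n)) for r in range(n)]
        for dst, c, data in msgs:
            lo, _ = bounds[c]
            for i, v in enumerate(data):
                bufs[dst][lo + i] += v
    # Now worker r holds the fully summed slice (r + 1) % n.

    # Phase 2 (all-gather): in round s, worker r forwards the finished slice
    # (r + 1 - s) % n to its right neighbor, which overwrites its stale copy.
    for s in range(n - 1):
        msgs = [((r + 1) % n, (r + 1 - s) % n, chunk(r, (r + 1 - s) % n)) for r in range(n)]
        for dst, c, data in msgs:
            lo, hi = bounds[c]
            bufs[dst][lo:hi] = data
    return bufs
```

Calling `ring_all_reduce([[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]])` leaves all three workers with `[111, 222, 333, 444]`. Building each round's messages before applying them mimics every worker sending at once. A real implementation overlaps these sends and receives on the network instead.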

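Each worker sends (and receives) 2 × (N − 1) chunks in total, N − 1 in each phase. Because a chunk is 1/N of the tensor, that comes to 2(N − 1)/N times the tensor size, which stays below twice the tensor size no matter how many workers you add. A naive scheme that funnels every full tensor through one root makes that root receive N − 1 whole tensors, so its link becomes the bottleneck as N grows. The ring pays for this in latency instead, since its 2(N − 1) rounds run one after another.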
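The bandwidth argument is plain arithmetic. Each of the 2 × (N − 1) chunks a worker sends is 1/N of the tensor. The sketch below compares that with a naive reduce in which every other worker sends its full tensor to a single root. The helper names and the root-based baseline are assumptions for illustration, and sizes are in whatever unit you pass in.

```python
def ring_bytes_sent_per_worker(tensor_size: float, n: int) -> float:
    """Hypothetical helper: data each worker sends in ring all-reduce,
    i.e. 2 * (n - 1) chunks of tensor_size / n."""
    return 2 * (n - 1) * tensor_size / n


def naive_root_bytes_received(tensor_size: float, n: int) -> float:
    """Hypothetical helper: data one root receives when the other n - 1 workers
    each send it their full tensor."""
    return (n - 1) * tensor_size


# Compare the two for a 1 GB tensor at a few cluster sizes.
for n in (2, 8, 64):
    ring = ring_bytes_sent_per_worker(1.0, n)
    naive = naive_root_bytes_received(1.0, n)
    print(f"N={n:3d}: ring {ring:.3f} GB per worker, naive root {naive:.0f} GB")
```

With a 1 GB tensor, ring traffic per worker grows only from 1 GB at N = 2 to about 1.97 GB at N = 64, while the naive root's inbound traffic grows to 63 GB.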